In September 1989, I presented a paper entitled "Usability Engineering at a Discount" at the 3rd International Conference on Human-Computer Interaction in Boston. In so doing, I officially launched the discount usability movement, though I'd been working out the ideas during the 2 years prior to that talk.

The idea, like many, was born of necessity. As a university professor in the 1980s, I had a much smaller budget than, say, the IBM User Interface Institute at the T.J. Watson Research Center, where I'd worked before rejoining academia. Clearly, I couldn't run the same kind of high-budget usability projects that the fancy labs did, so I turned this to my advantage and started developing a methodology for cheap usability.

My 1989 paper advocated 3 main components of discount usability:

- Simplified user testing, which includes a handful of participants, a focus on qualitative studies, and use of the thinking-aloud method. Although thinking aloud had been around for years before I turned it into a discount method, the idea that testing 5 users was "good enough" went against human factors orthodoxy at the time.

- Narrowed-down prototypes — usually paper prototypes — that support a single path through the user interface. It's much faster to design paper prototypes than something that embodies the full user experience. You can thus test very early and iterate through many rounds of design.

- Heuristic evaluation, in which you evaluate user interface designs by inspecting them against established usability guidelines.

It might be hard to appreciate today, when many people talk about "lean UX," but these ideas were heresy 20 years ago. The gold standard then was elaborate (and expensive) studies with quantitative metrics. Even now, I appreciate the place of this older approach, and we sometimes run benchmark studies, both for our independent research and for bigger clients who want to track metrics despite the expense. But quant should be the exception and qual the rule.

That I advocated simplified methods was bad enough; I also had the gall to celebrate them. People were supposed to be ashamed when forced to run a cheap study, but in my 1989 paper, I boasted about applying discount usability in two financial-sector case studies: one that redesigned bank account information and another that redesigned information about individual retirement accounts (IRAs). For the bank account project, I tested 8 different versions of the design; in the IRA project, I tested 11 different versions. These extensive iterations were completed in 90 hours in the first case and 60 hours in the second (roughly 11 and 5.5 hours per version, respectively). Both projects had great results and were possible only with discount methods.

Discount usability often gives better results than deluxe usability because its methods drive an emphasis on early and rapid iteration with frequent usability input.

So, from today's standpoint, can I claim victory? Although my 20 years of campaigning for discount usability have certainly not been in vain, I can't yet declare a win:

- Most companies still waste their money testing more than 5 users per usability testing round. My 1989 paper actually advocated testing 3 users, which usually gives the highest ROI. I've since backtracked from this radical position and now advocate 5 users as a compromise that will work in most organizations, even if they're not as fast-moving as I envisioned designers to be in 1989.

- People still pay far more attention to questionable quantitative studies than they do to simpler qualitative studies that have much greater validity.

- Most design teams still don't believe in paper prototyping, preferring instead to spend considerable time creating elaborate design representations before they start collecting user feedback.

- Many people still reject the value of usability guidelines and of heuristic evaluation, even though heuristic evaluation has become the second-most popular usability method.

- While discount usability methods are a perfect match for Agile development projects, many companies adopted Agile without taking on the accompanying discount usability. (Result: confused UX.)

Robustness of Usability Methods

Another, greater controversy surrounded my claim that usability methods are robust enough to offer decent results even when you don't use perfect research methodology. Obviously, the best methods deliver the best results, and I've spent much of the last 26 years teaching people the correct usability techniques.

But even people who aren't dedicated usability specialists and don't use the refined approach I demand of people I hire for my team can still conduct user studies. For example, designers can do their own user testing. I've seen countless examples of the value of less-than-perfect methodology. In the spirit of celebrating discount usability's anniversary, here's data from a 1988 study I did while I was evolving the thinking behind the 1989 paper:

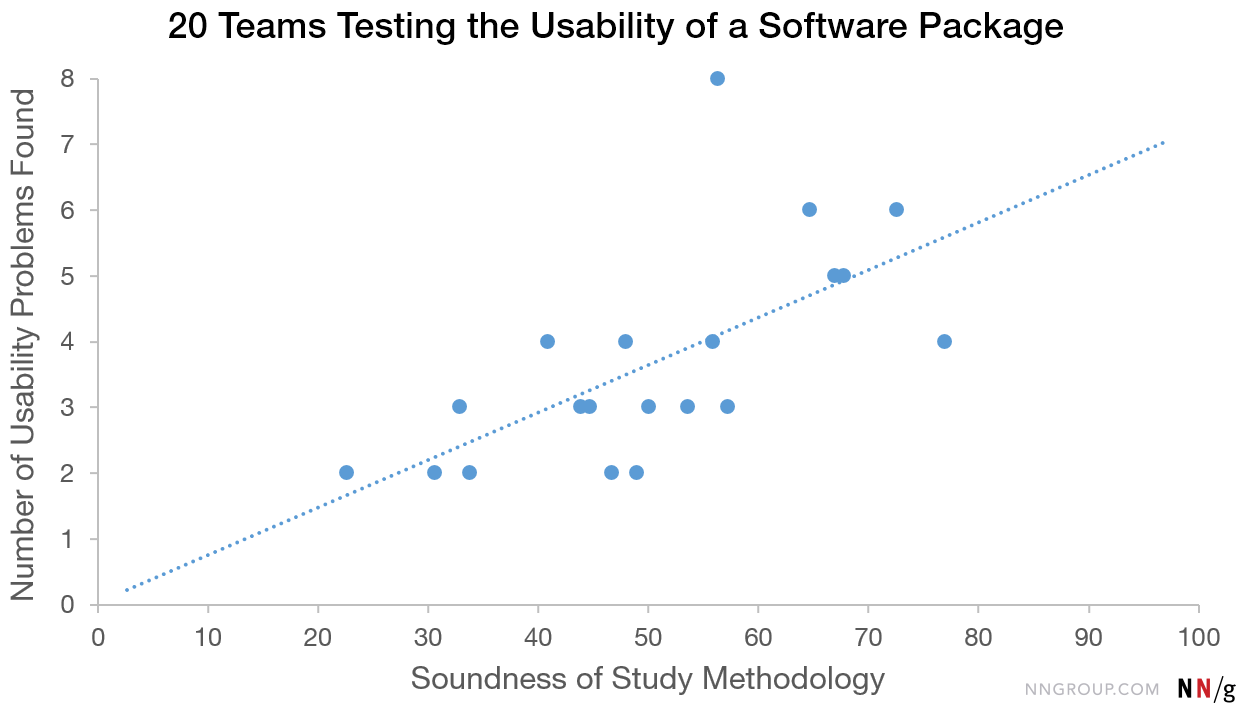

In this chart, each of the 20 dots represents a team that ran a usability study of MacPaint 1.7 (an early drawing program). Each team tested 3 users, and I rated how well they ran the study on a 0–100 scale, with 100 representing my model of the ideal test. In total, the software package had 8 major usability problems (along with a number of smaller issues that I didn't analyze).

The chart clearly shows that teams using better methodology tended to find more usability problems. In fact, the study-quality measure accounted for 58% of the variability in the number of identified usability problems. (The other 42% was determined by the talent of the team members — and probably a bit of pure luck.)
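The "58% of the variability" figure is what statisticians call the coefficient of determination, R². The article doesn't say which model produced it; assuming it came from an ordinary least-squares line fitted to problems found versus study-quality score, R² equals the squared Pearson correlation between the two measures. Here is a minimal Python sketch of that calculation. The function name `r_squared` and the sample numbers are hypothetical and are not the 1988 data.

```python
def r_squared(x, y):
    """Share of the variance in y explained by a least-squares line fitted on x.

    Assumes simple linear regression (one predictor), where R^2 equals the
    squared Pearson correlation. Hypothetical helper for illustration only.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of squared deviations and the cross-product of deviations.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    return (sxy * sxy) / (sxx * syy)


if __name__ == "__main__":
    # Hypothetical toy data, NOT the study's measurements.
    quality = [1, 2, 3, 4]
    problems = [2, 1, 4, 3]
    print(r_squared(quality, problems))
```

With these toy inputs the function returns 0.36, meaning the fitted line explains 36% of the variance in the second measure; the remaining 64% is left to whatever the model doesn't capture, just as the article attributes the remaining 42% to team talent and luck.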

So yes, it does pay to take a course on proper usability testing :-)

Better usability methodology does lead to better results, at least on average. But the very best performance was recorded for a team that scored only 56% on compliance with best-practice usability methodology. And even teams with a 20–30% methodology rating (i.e., people who ran lousy studies) still found 1/4 of the product's serious usability problems.

A quarter of 8 is 2, and finding two serious usability problems in your design is well worth doing, particularly if you can do so after testing only three users: basically, an afternoon's work. Of course, I'd rather that you get a usability expert to dig even deeper, but even so, two is infinitely more than zero (the amount of usability knowledge you'd accrue without any testing).

Bad user testing beats no user testing.

This last point is still not widely accepted, and discount usability doesn't yet rule the world of user interface design. But it has come a long way since I launched it to a lecture room of about 100 people in 1989. On balance, I'm happy that I started this campaign and will continue the fight for simpler usability, more broadly applied.
